Running a WooCommerce store means balancing user experience, site performance, and SEO. One overlooked but powerful tool in this balance is the robots.txt file. If bots are crawling your store heavily, especially cart pages, filter links, and “add to cart” URLs, they may be wasting your crawl budget and adding unnecessary server load.

An optimized robots.txt tells well-behaved search engine crawlers to focus on your valuable pages (product pages, category pages, and so on) and to skip URLs that have no SEO value or that can cause indexing problems, such as filter and sort combinations that generate near-duplicate pages.

✅ The Ideal Robots.txt Configuration

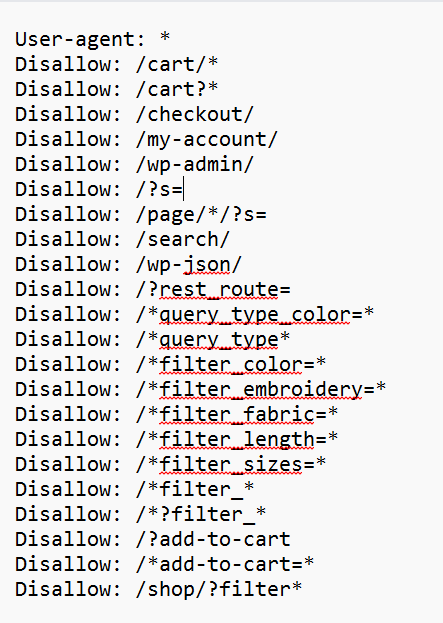

Here is a sample robots.txt file tailored for a WooCommerce store facing excessive crawling on non-SEO-friendly URLs
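If you prefer to keep your rules in version control rather than copying them from a screenshot, you can generate the file from a list of patterns. The sketch below is a minimal illustration under stated assumptions, not necessarily the exact file shown above: the patterns assume WooCommerce's default page slugs (`/cart/`, `/checkout/`, `/my-account/`) and its default query parameters (`add-to-cart`, `orderby`, and the `filter_` prefix used by the layered-navigation filter widgets). `build_robots_txt` is a hypothetical helper name and the sitemap URL is a placeholder; adjust everything to match your own permalinks.

```python
# Hypothetical generator for a WooCommerce robots.txt.
# Patterns assume default WooCommerce slugs and query parameters;
# adjust them to your own permalink settings before deploying.

DISALLOW = [
    "/wp-admin/",
    "/cart/",
    "/checkout/",
    "/my-account/",
    "/*?*add-to-cart=",  # "Add to cart" links, e.g. /shop/?add-to-cart=42
    "/*?*orderby=",      # sorting variants of shop and category pages
    "/*?*filter_",       # layered-navigation filters, e.g. ?filter_color=red
]

ALLOW = [
    "/wp-admin/admin-ajax.php",  # WordPress front-end AJAX endpoint
]


def build_robots_txt(disallow, allow=(), sitemap=None, user_agent="*"):
    """Return robots.txt text containing a single group for `user_agent`."""
    lines = [f"User-agent: {user_agent}"]
    lines += [f"Disallow: {path}" for path in disallow]
    lines += [f"Allow: {path}" for path in allow]
    if sitemap:
        # Sitemap lines are independent of groups; a blank line keeps it readable.
        lines += ["", f"Sitemap: {sitemap}"]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Placeholder sitemap URL; replace it with your store's real sitemap.
    print(build_robots_txt(DISALLOW, ALLOW, sitemap="https://example.com/sitemap.xml"))
```

Rule order does not matter to Google, which applies the most specific (longest) matching rule, so `Allow: /wp-admin/admin-ajax.php` still overrides the shorter `Disallow: /wp-admin/` even though it is written after it.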

⚠️ Things to Keep in Mind

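- Blocking does not remove URLs that are already indexed: use canonical tags and noindex meta tags for that. Google can only see a noindex tag on a page it is allowed to crawl, so don't block such a URL in robots.txt until it has dropped out of the index.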

- It won’t stop malicious bots: robots.txt is a voluntary convention that only well-behaved crawlers follow. For bad actors, consider a firewall or bot filtering at the server level.

- Test your file: use the robots.txt report and the URL Inspection tool in Google Search Console.
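Search Console shows how Google reads your live file. To sanity-check rules before deploying them, a small script can apply the matching logic described in RFC 9309 (the Robots Exclusion Protocol standard): `*` matches any sequence of characters, a trailing `$` anchors the end of the URL, the longest matching rule wins, and `Allow` wins a tie. This is a simplified sketch: it only evaluates the `User-agent: *` group (what a crawler without its own named group would obey) and skips percent-encoding normalization. `parse_rules` and `is_allowed` are hypothetical helper names. Python's built-in `urllib.robotparser` is not a substitute here, because it does plain prefix matching with first-match-wins semantics and does not interpret `*` inside paths.

```python
import re

SAMPLE = """\
User-agent: *
Disallow: /wp-admin/
Allow: /wp-admin/admin-ajax.php
Disallow: /cart/
Disallow: /*?*add-to-cart=
"""


def parse_rules(robots_txt):
    """Collect (is_allow, pattern) pairs from groups that apply to 'User-agent: *'."""
    rules = []
    star_group = False  # does the current group include 'User-agent: *'?
    in_rules = False    # have we moved past the group's user-agent lines?
    for raw in robots_txt.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if ":" not in line:
            continue
        field, value = (part.strip() for part in line.split(":", 1))
        field = field.lower()
        if field == "user-agent":
            if in_rules:  # a user-agent line after rules starts a new group
                star_group, in_rules = False, False
            star_group = star_group or value == "*"
        elif field in ("allow", "disallow"):
            in_rules = True
            if star_group and value:  # an empty Disallow blocks nothing
                rules.append((field == "allow", value))
    return rules


def _to_regex(pattern):
    """Translate a robots.txt path pattern into a compiled regex."""
    anchored = pattern.endswith("$")
    body = pattern[:-1] if anchored else pattern
    regex = ".*".join(re.escape(part) for part in body.split("*"))
    return re.compile(regex + ("$" if anchored else ""))


def is_allowed(rules, path):
    """Apply the longest matching rule; Allow wins a tie; no match means allowed."""
    best_len, allowed = -1, True
    for is_allow, pattern in rules:
        if _to_regex(pattern).match(path):  # match() anchors at the path start
            if len(pattern) > best_len or (len(pattern) == best_len and is_allow):
                best_len, allowed = len(pattern), is_allow
    return allowed


if __name__ == "__main__":
    rules = parse_rules(SAMPLE)
    for path in ["/product/blue-hoodie/", "/shop/?add-to-cart=42", "/cart/",
                 "/wp-admin/admin-ajax.php", "/wp-admin/options.php"]:
        print(f"{path:30} {'allowed' if is_allowed(rules, path) else 'blocked'}")
```

A useful habit is to run it against URLs you must keep crawlable (products, categories, blog posts) alongside the ones you expect to block; if a product URL comes back blocked, one of your patterns is too broad.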

Final Thoughts

If you’re running a WooCommerce store, your robots.txt file shouldn’t be an afterthought. Blocking low-value and transactional URLs (cart, checkout, account, filter, and “add to cart” links) can improve your SEO hygiene, server performance, and crawl efficiency. With compliant crawlers steering clear of the wrong pages, your real content (product listings, categories, and blog posts) gets the attention it deserves.